What I did

Role: Product Designer

Date: March 2019 - May 2019

Location: Carnegie Mellon University

I created a productivity robot as an add-on to the Amazon Echo Dot 2. I wanted to leverage existing technology and create a more approachable design with a physical touch point.

This design went through many iterations as I put theories of animism and interaction design into practice. Through this process, I honed my sketching, CAD, coding, and circuit skills.

The Process

I first tested the kinds of interactions I wanted to create and the added functionality I wanted this robot to have. Given that this robot would be the physical touch point for Amazon Alexa, I wanted to understand what would be most helpful. To do this, I mapped out my day and figured out when I needed data. I then sketched a few of the key interactions.

Testing the Sounds

The ultrasonic sensor was used to detect whether a person was nearby. This way, the robot could interact with people when they were around. However, this was removed in future iterations as
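As a rough illustration of how presence detection with an ultrasonic sensor can work, here is a minimal Python sketch. It is not the code that ran on the robot: the names `echo_to_distance_cm` and `PresenceDetector`, the 100 cm threshold, and the three-reading debounce are all assumptions made for the example. The only fixed fact is the physics, where distance is half the echo's round-trip time multiplied by the speed of sound.

```python
# Approximate speed of sound in air at room temperature, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time (microseconds) to a one-way distance (cm)."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


class PresenceDetector:
    """Hypothetical helper: reports presence after several close readings in a row."""

    def __init__(self, threshold_cm: float = 100.0, required_hits: int = 3):
        # Both defaults are illustrative assumptions, not values from the project.
        self.threshold_cm = threshold_cm
        self.required_hits = required_hits
        self._hits = 0

    def update(self, distance_cm: float) -> bool:
        """Feed one distance reading; return True while a person is considered present."""
        if 0 < distance_cm <= self.threshold_cm:
            self._hits += 1
        else:
            # Any far (or invalid) reading resets the streak.
            self._hits = 0
        return self._hits >= self.required_hits


if __name__ == "__main__":
    detector = PresenceDetector()
    for reading_cm in [150, 80, 70, 60, 200]:
        print(reading_cm, detector.update(reading_cm))
```

Requiring several consecutive close readings is one simple way to keep a single noisy measurement from triggering a reaction.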

Various emotional responses based on sounds

The Basic Schematic of the first iteration

Testing the Eyes

I wanted to create expressions through the eyes and use them to convey information. Below are a few of the expressions that the robot was able to make.

The weather icons were also used to create the expressions. These were based on icons found on flaticon.com. Because the screen was so small, I modified these icons slightly so that each icon kept its shape.

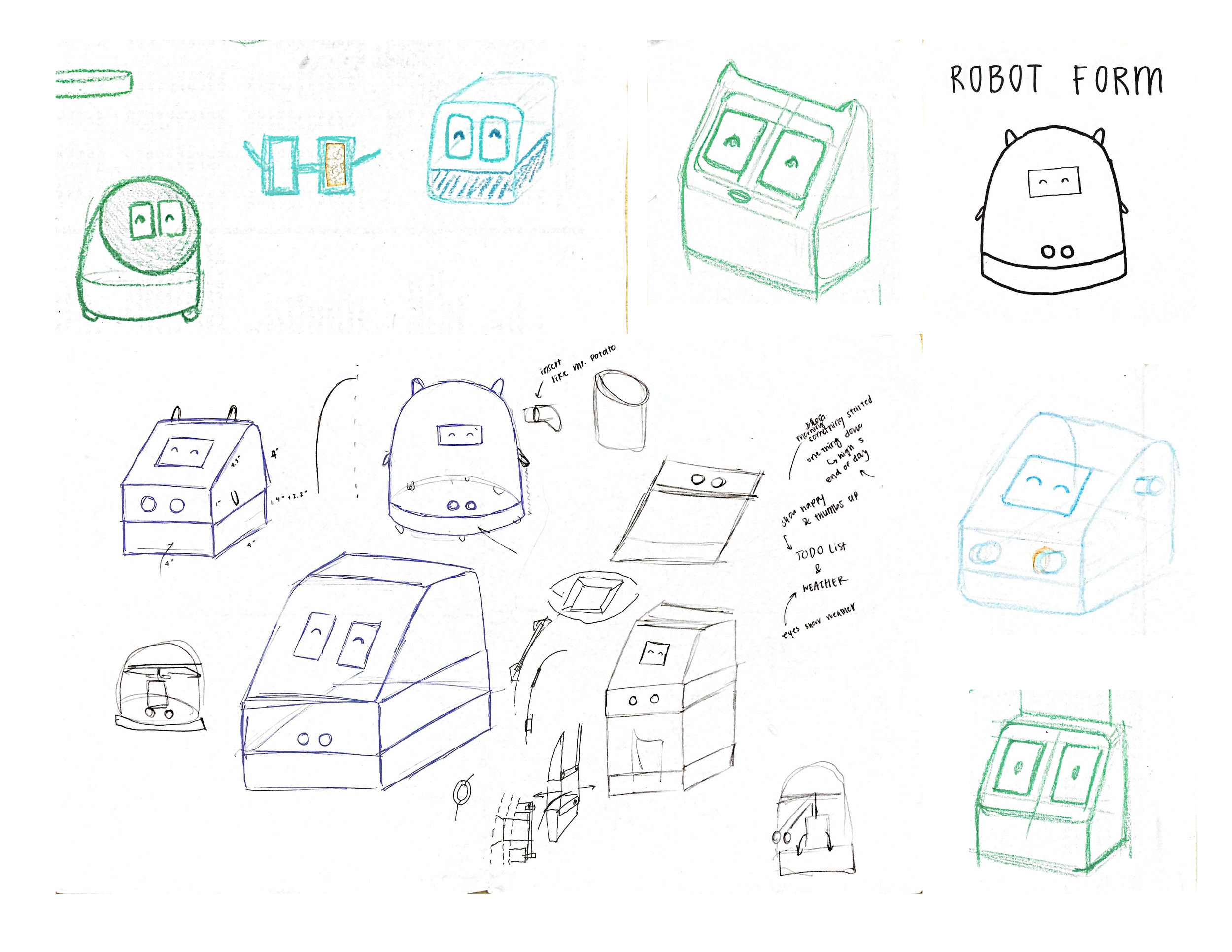

For this project, I decided to focus on the form of the robot. I sketched a variety of forms, trying different shapes to convey different interactions, and spent a lot of time working out which form would best communicate the interactions that I wanted. Hashika Jian and I held a brainstorming session where we sketched the following designs.